Glenn Engstrand

Here I compare Kubernetes as it runs on Amazon’s and Google’s clouds in terms of ease of provisioning, cost, and performance under load, using a nine-component system for a simple news feed application. I also share a secret that I learned about AWS during the research that went into this blog post.

Those of you who are new to Kubernetes may not realize why it has become so important. Modern software development delivers value much more quickly than it used to, mostly because of the adoption of 12-factor-compliant microservice architectures. Such architectures do a lot to ease the disruption inherent in deploying new releases of sophisticated software, but at the cost of increased complexity in service interaction and governance. Docker does a great job of reducing some of that complexity by making it easy to repeatedly and consistently build and manage the containers that these microservices run in, but that solves only half the problem. How the services communicate with each other, known as service orchestration, is also complex and hard to manage. Kubernetes has become the most popular container orchestration platform. It works with Docker to make it relatively easy to deploy and manage systems built on a microservice architecture.

It used to be very difficult to provision a Kubernetes cluster in Amazon Web Services. Mere mortals would struggle with open source projects such as kops or kube-aws, which were still very hard to understand and use properly. Advanced tech ops wizards with deep arcane knowledge of Kubernetes internals would write custom Terraform scripts. Amazon had been running a very private beta known as EKS, or Amazon Elastic Container Service for Kubernetes, since the beginning of the year. They made EKS available to the general public in the summer of 2018.

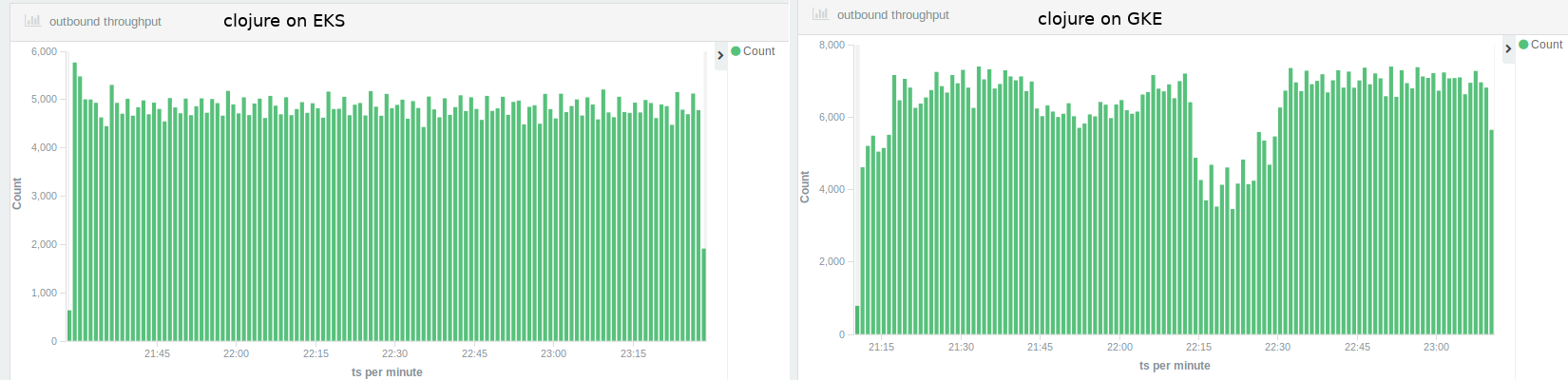

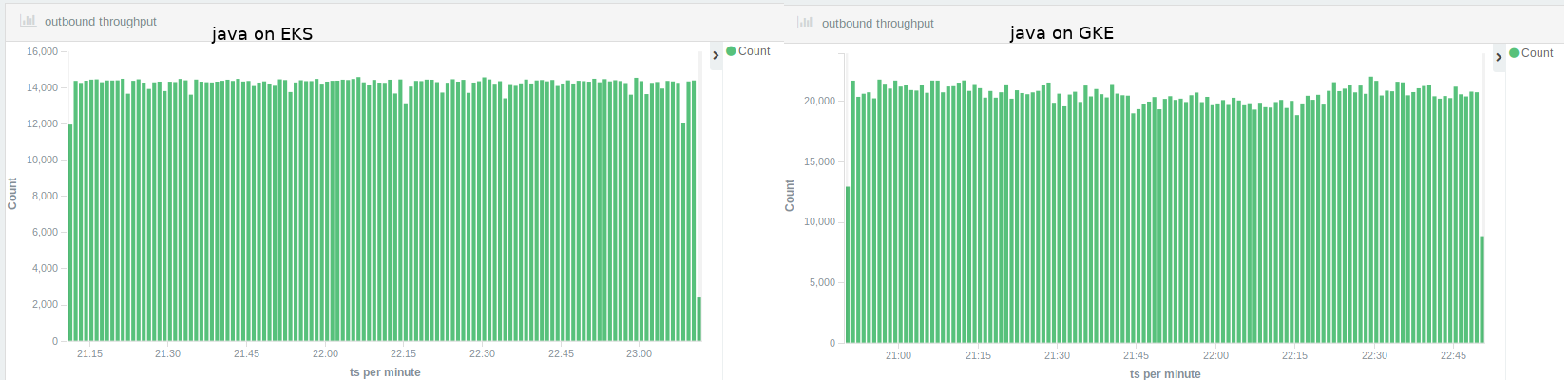

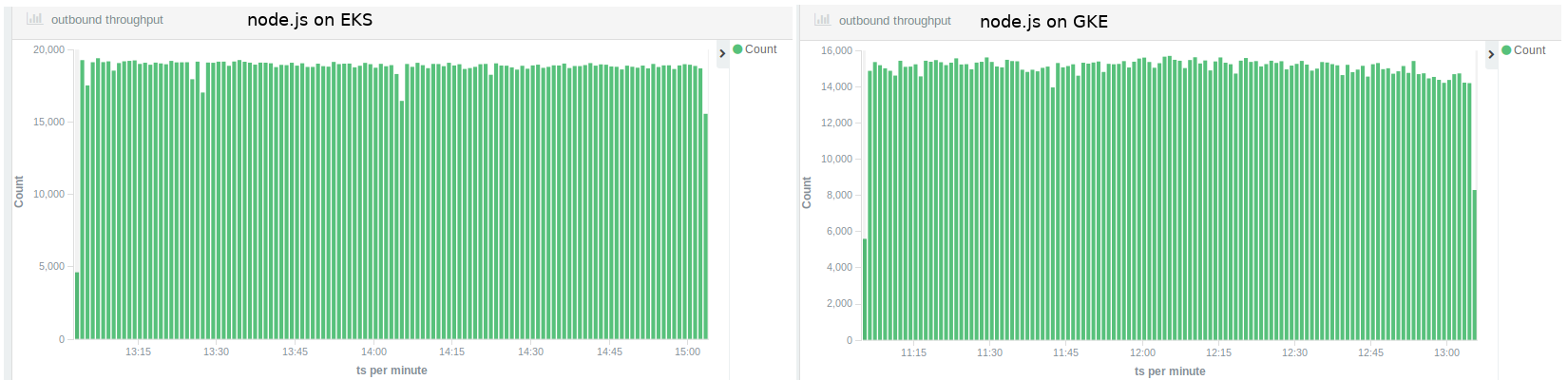

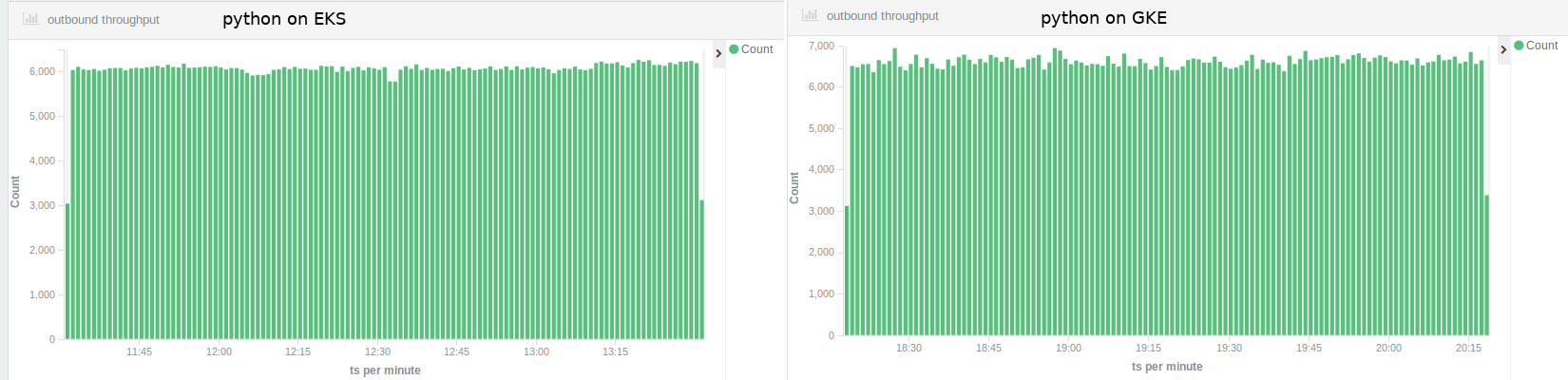

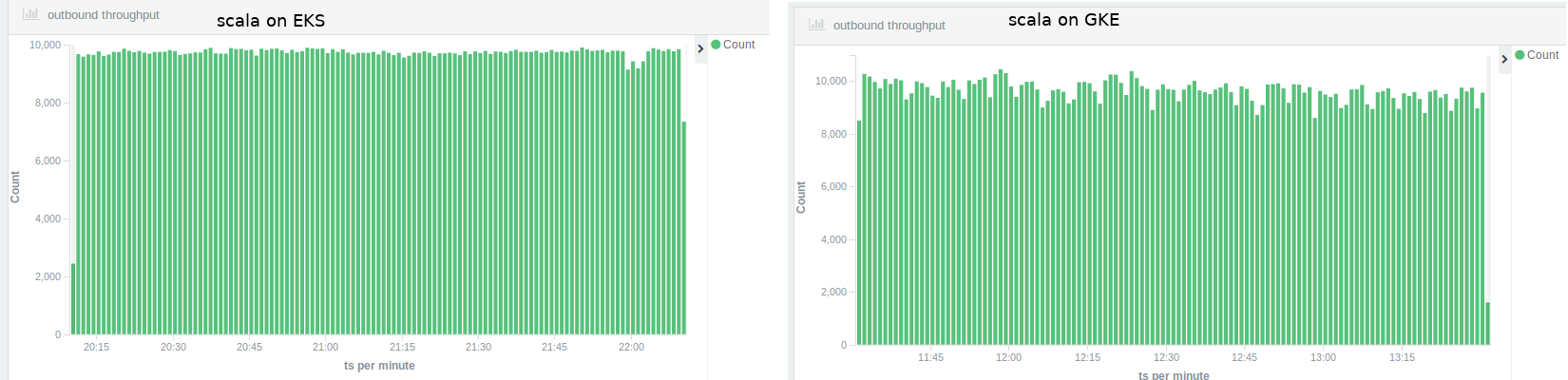

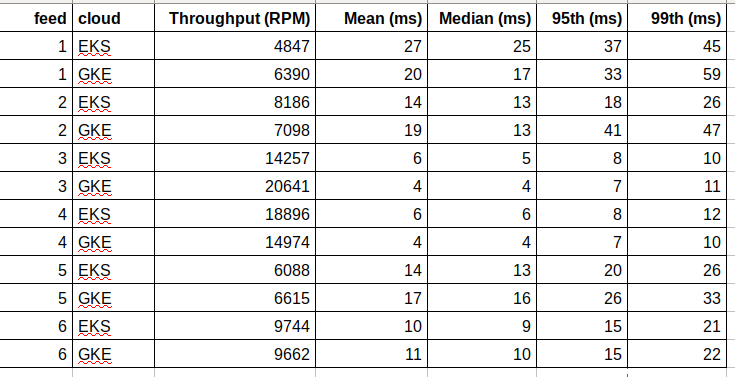

A little over a year ago, I compared Amazon’s and Google’s cloud technologies by running an implementation of the news feed service on both platforms and documenting the differences and similarities. I decided that now would be a good time to do something similar with their Kubernetes offerings. I ran two-hour load tests of the six different implementations of the news feed service on Kubernetes as provisioned in both EKS and GKE, Google’s Kubernetes Engine. I collected the performance-related results from all twelve tests and analyzed the data.

All components in the tests ran in the Kubernetes cluster itself. There was no dependency on any cloud-vendor-specific platform services such as RDS or Cloud SQL. Only two things varied from one test to the next: which version of the feed microservice was deployed, and on which cloud.

Provisioning a GKE cluster is a piece of cake. You fill out a single web form specifying the number and type of instances. I used seven n1-standard-4 instances for each test. You open the Google cloud console, connect to your newly created cluster via gcloud, clone the GitHub repo, and use kubectl to create the configuration, services, deployments, and jobs from the already published manifests.
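The post-provisioning steps above can be sketched as a short script. This is only an illustration, not the exact commands used for these tests: the cluster name, zone, repository URL, and manifest directory are all placeholders that you would supply, and the sketch assumes that `gcloud`, `git`, and `kubectl` are already installed. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess


def gke_deploy_commands(cluster, zone, repo_url, manifest_dir):
    """Return the CLI commands for the steps described above, in order.

    Every argument is a placeholder supplied by the caller; none of
    these values come from the original tests.
    """
    return [
        # Fetch credentials so that kubectl talks to the new cluster.
        ["gcloud", "container", "clusters", "get-credentials",
         cluster, "--zone", zone],
        # Clone the repository that holds the published manifests.
        ["git", "clone", repo_url],
        # Create the configuration, services, deployments, and jobs.
        ["kubectl", "create", "-f", manifest_dir],
    ]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Calling `run(gke_deploy_commands("my-cluster", "my-zone", "https://example.com/your/repo.git", "repo/manifests"))` prints the three commands without executing anything; pass `dry_run=False` once the placeholders point at real resources.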

Provisioning an EKS cluster is a different matter altogether. It is much easier than either kops or kube-aws, but it is a nine-step process. You need to read a long and detailed AWS help topic very carefully and be prepared to follow it to the letter. At the time of this writing, there are plenty of gotchas here, and you need to be very familiar with IAM, CloudFormation, EKS, the AWS CLI, and the AWS IAM Authenticator for Kubernetes. You will need to use both the AWS Console and the CLI. Some things that they list as optional are actually mandatory. You will need to install and configure their custom version of kubectl. I used seven m4.xlarge instances for each test. After that, you use kubectl on your local machine the same way that you did in Google's console for GKE.

The second difference is in price. I spent $32.86 to run the six tests in EKS and $23.54 to run those same tests in GKE. Part of that difference comes from Google's cheaper rates, but it also takes longer to provision the cluster in Amazon, and that extra time is billable.
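To put those two totals in perspective, here is a small worked calculation. Only the two dollar amounts and the count of six tests come from the figures above; the per-test numbers are simple averages derived from them, so they include provisioning time and are not measured per-test costs.

```python
from decimal import Decimal

# Totals quoted above for six tests on each cloud.
EKS_TOTAL = Decimal("32.86")
GKE_TOTAL = Decimal("23.54")
TESTS_PER_CLOUD = 6


def cost_summary(eks=EKS_TOTAL, gke=GKE_TOTAL, tests=TESTS_PER_CLOUD):
    """Derive the absolute and relative cost gap from the two totals."""
    return {
        # How many more dollars the EKS runs cost in total.
        "difference": eks - gke,
        # How much more EKS cost relative to GKE, in percent.
        "eks_premium_pct": round((eks / gke - 1) * 100, 1),
        # Derived averages, not measured per-test costs.
        "eks_per_test": round(eks / tests, 2),
        "gke_per_test": round(gke / tests, 2),
    }


summary = cost_summary()
print(summary["difference"])       # 9.32
print(summary["eks_premium_pct"])  # 39.6
```

In other words, the EKS runs cost $9.32 more in total, or roughly 40% more than the same runs on GKE.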

On the surface, the performance differences between the two clouds appeared negligible. Looking at the averages and percentiles, throughput was slightly higher on EKS and latency was marginally lower on GKE. Applying a machine learning algorithm to the performance data revealed an interesting insight. The tests with high create-outbound throughput but low create-friend or create-participant throughput ran almost exclusively on EKS.

Why would feed implementations that are efficient enough to sustain high throughput on all types of requests on GKE show lower throughput for certain types of requests on EKS? A create outbound request usually amounts to reading one list of friends from Redis and writing, on average, five news feed items to Cassandra. A create friend or create participant request always just inserts one row (one varchar for a participant or two integers for a friend) into a table in MySQL. Is it possible that AWS throttles MySQL traffic for hosts that are not a part of RDS?
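The contrast between those request types can be made concrete with a toy model. This is a sketch only, not the feed service's actual code: the class and function names are hypothetical, and plain Python containers stand in for Redis, Cassandra, and MySQL so that the backend operations per request can be counted.

```python
from collections import Counter


class InMemoryBackends:
    """Hypothetical stand-ins for the three data stores."""

    def __init__(self):
        self.friends_cache = {}   # stands in for Redis: id -> friend ids
        self.feed_items = []      # stands in for Cassandra
        self.participants = []    # stands in for a MySQL table
        self.friends = []         # stands in for a MySQL table
        self.ops = Counter()      # backend operations performed so far


def create_participant(backends, name):
    """One MySQL insert: a single varchar."""
    backends.participants.append(name)
    backends.ops["mysql_insert"] += 1
    return len(backends.participants)  # toy identifier


def create_friend(backends, from_id, to_id):
    """One MySQL insert: two integers."""
    backends.friends.append((from_id, to_id))
    backends.ops["mysql_insert"] += 1


def create_outbound(backends, sender_id, story):
    """One Redis read, then one Cassandra write per friend."""
    friend_ids = backends.friends_cache.get(sender_id, [])
    backends.ops["redis_read"] += 1
    for friend_id in friend_ids:
        backends.feed_items.append((friend_id, sender_id, story))
        backends.ops["cassandra_write"] += 1
    return len(friend_ids)
```

With a sender whose cached friend list holds five entries, one `create_outbound` call records one Redis read and five Cassandra writes and never touches MySQL, while each `create_participant` or `create_friend` call records exactly one MySQL insert. That is why a slowdown limited to MySQL traffic would show up in only the latter two request types.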

You may be wondering how I was able to arrive at that insight about EKS. After all, it is unusual to accomplish that type of analysis with machine learning. Be sure to catch my next blog post, where I show you how to use machine learning to analyze microservice performance data.

The title of this post suggests that one cloud vendor might be better than another. Should you choose Amazon or Google when rolling out a Kubernetes deployment? I ask that you look at this differently. If you choose to deploy your microservices with Kubernetes, then you can feel confident that those microservices will work well on either Amazon’s or Google’s cloud. Targeting Kubernetes can reduce your risk of vendor lock-in.